Nos Publications

2025

Applied Mathematics and Computation, 2025, vol. 490, p. 129173, 2025

abstract

Abstract

In this paper, we propose a new class of second-order one-step time-splitting schemes for scalar and systems of conservation laws. The strategy is based on a relaxation approximation which consists in introducing a relaxation system with linearly degenerate characteristic fields to approximate the original system and deal with its nonlinearities. The numerical schemes are based on the use of arbitrarily high-order approximations of the underlying linear advection equations. Numerical evidence is proposed to illustrate the behaviour of our schemes.

International Journal for Numerical Methods in Engineering, Volume126, e70051, 2025

abstract

Abstract

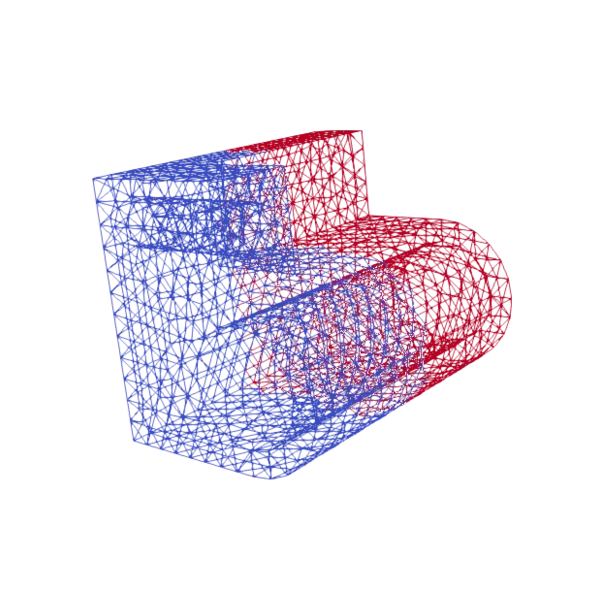

In this article, we propose and investigate an explicit partitioned method for solving shock dynamics in fluid–structure interaction (FSI) problems. The method is fully conservative, ensuring the local conservation of mass, momentum, and energy, which is crucial for accurately capturing strong shock interactions. Using an updated-Lagrangian finite-volume approach, the method integrates a subcycling strategy to decouple time steps between the fluid and structure, significantly enhancing computational efficiency. Numerical experiments confirm the accuracy and stability of the method, demonstrating that it retains the key properties of monolithic solvers while reducing computational costs. Extensive validation across 1D and 3D FSI problems shows the method's capability for large-scale, fast transient simulations, making it a promising solution for high-performance applications.

Computers & Fluids, Volume 297, 106648, 2025

abstract

Abstract

In this paper, we present an extension to non-uniform meshes of a 1D scheme [Rémi Abgrall and Davide Torlo. “Some preliminary results on a high order asymptotic preserving computationally explicit kinetic scheme”. In: Abgrall and Torlo (2022). This scheme is arbitrary high order convergent in space and time for any hyperbolic system of conservation laws. It is based on a Finite Difference technique. We show that this numerical method is not conservative but it satisfies a Lax–Wendroff theorem under restrictive conditions on the mesh. To relax this condition we propose a Finite Volume alternative. This new discretization can be seen as a direct generalization to non-uniform meshes of the Finite Difference schemes in the sense that the fluxes of both methods are the same on uniform meshes. We apply the two schemes to the Euler system and we assess their performances on regarding test problems of the literature.

2024

2024

abstract

Abstract

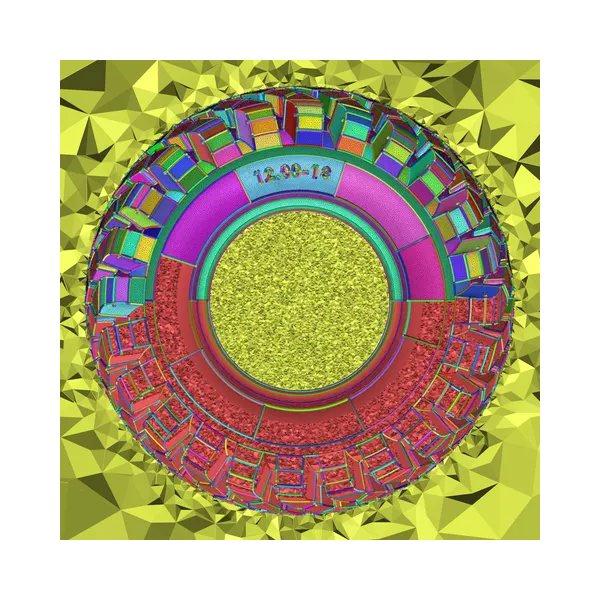

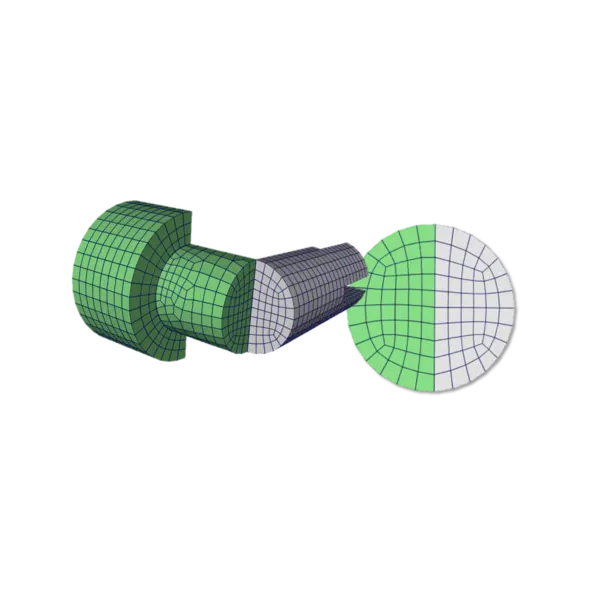

This animation has been realized using ParaView [1] and aims to demonstrate the different steps of an algorithm developed to generate block-structured meshes suitable for Computational Fluid Dynamics simulations of flows around vehicles during atmospheric re-entry. This method takes a tetrahedral mesh of the domain as input and the quadrangular block discretization of the vehicle surface. A linear blocking is obtained using an advancing front algorithm. This means it is incrementally created from the vehicle surface, layer by layer. Some distance and vector fields are computed on the tetrahedral mesh to lead the block's extrusion direction. Then, the linear blocking is curved, and the final mesh is generated. The algorithm is freely available and implemented in the open-source C++ meshing framework GMDS [2,3]. The algorithm is based on the previous work of Ruiz-Gironés et al. [4,5]. Details of the algorithm are available in [6,7]. Here, the algorithm generates a mesh around a vehicle with two wings. This block structure is generated on four different layers. The creation of the first layer of blocks is shown here, block by block. [1] https://www.paraview.org/ [2] https://github.com/LIHPC- Computational- Geometry/gmds [3] Franck Ledoux, Jean-Claude Weill, and Yves Bertrand. Gmds: A generic mesh data structure. 17th International Meshing Roundtable, 2008. [4] Eloi Ruiz-Gironés. Automatic hexahedral meshing algorithms: from structured to unstructured meshes. PhD thesis, Universitat Politècnica de Catalunya (UPC), 2011. [5] Eloi Ruiz-Gironés, Xevi Roca, and Josep Sarrate. The receding front method applied to hexahedral mesh generation of exterior domains. Engineering with computers, 28(4):391–408, 2012. [6] Claire Roche, Jérôme Breil, Simon Calderan, Thierry Hocquellet, and Franck Ledoux. Curved hexahedral block structure generation by advancing front. In SIAM International Meshing Roundtable Workshop 2024 (SIAM IMR24), 2024. [7] Claire Roche, Jérôme Breil, Thierry Hocquellet, and Franck Ledoux. Block-structured quad meshing for supersonic flow simulations. In SIAM International Meshing Roundtable Workshop 2023 (SIAM IMR23), Amsterdam, The Netherlands, March 2023.

European MPI Users' Group Meeting, 2024

abstract

Abstract

The evolution of parallel computing architectures presents new challenges for developing efficient parallelized codes. The emergence of heterogeneous systems has given rise to multiple programming models, each requiring careful adaptation to maximize performance. In this context, we propose reevaluating memory layout designs for computational tasks within larger nodes by comparing various architectures. To gain insight into the performance discrepancies between shared memory and shared-address space settings, we systematically measure the bandwidth between cores and sockets using different methodologies. Our findings reveal significant differences in performance, suggesting that MPI running inside UNIX processes may not fully utilize its intranode bandwidth potential. In light of our work in the MPC thread-based MPI runtime, which can leverage shared memory to achieve higher performance due to its optimized layout, we advocate for enabling the use of shared memory within the MPI standard.

15th International Conference on Parallel Processing & Applied Mathematics, 2024

abstract

Abstract

Breaking down the parallel time into work, idleness, and overheads is crucial for assessing the performance of HPC applications, but difficult to measure in asynchronous dependent tasking runtime systems. No existing tools allow its measurement portably and accurately. This paper introduces POT: a tool-suite for dependent task-based applications performance measurement. We focus on its low-disturbance methodology consisting of task modeling, discrete-event tracing, and post-mortem simulation-based analysis. It supports the OMPT standard OpenMP specifications. The paper evaluates the precision of POT's parallel time breakdown analysis on LLVM and MPC implementations and shows that measurement bias may be neglected above 16µs workload per task, portably across two architectures and OpenMP runtime systems

HPCAsia 2024 Workshops Proceedings of the International Conference on High Performance Computing in Asia-Pacific Region Workshops, 2024

abstract

Abstract

The adoption of ARM processor architectures is on the rise in the HPC ecosystem. Fugaku supercomputer is a homogeneous ARMbased machine, and is one among the most powerful machine in the world. In the programming world, dependent task-based programming models are gaining tractions due to their many advantages like dynamic load balancing, implicit expression of communication/computation overlap, early-bird communication posting,... MPI and OpenMP are two widespreads programming standards that make possible task-based programming at a distributed memory level. Despite its many advantages, mixed-use of the standard programming models using dependent tasks is still under-evaluated on large-scale machines. In this paper, we provide an overview on mixing OpenMP dependent tasking model with MPI with the state-of-the-art software stack (GCC-13, Clang17, MPC-OMP). We provide the level of performances to expect by porting applications to such mixed-use of the standard on the Fugaku supercomputers, using two benchmarks (Cholesky, HPCCG) and a proxy-application (LULESH). We show that software stack, resource binding and communication progression mechanisms are factors that have a significant impact on performance. On distributed applications, performances reaches up to 80% of effiency for task-based applications like HPCCG. We also point-out a few areas of improvements in OpenMP runtimes.

Computers & Mathematics with Applications, Volume 158, Pages 56-73, ISSN 0898-1221, 2024

abstract

Abstract

We propose in this article a monotone finite volume diffusion scheme on 3D general meshes for the radiation hydrodynamics. Primary unknowns are averaged value over the cells of the mesh. It requires the evaluation of intermediate unknowns located at the vertices of the mesh. These vertex unknowns are computed using an interpolation method. In a second step, the scheme is made monotone by combining the computed fluxes. It allows to recover monotonicity, while making the scheme nonlinear. This scheme is inserted into a radiation hydrodynamics solver and assessed on radiation shock solutions on deformed meshes.

Proceedings of the 2024 International Meshing Roundtable (IMR), 2024

abstract

Abstract

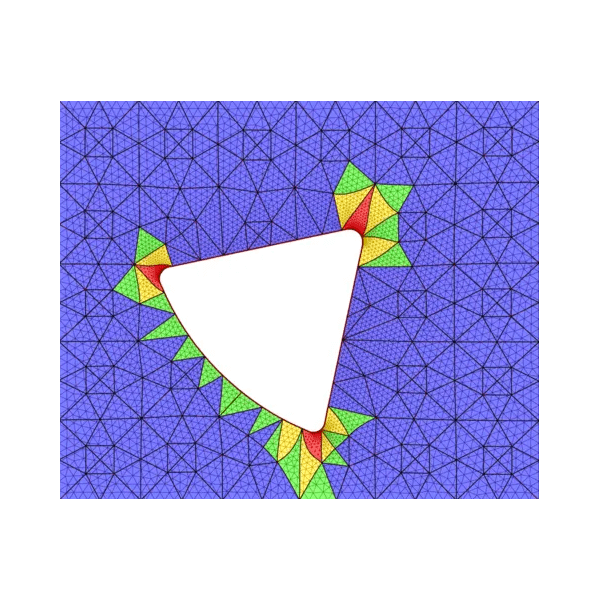

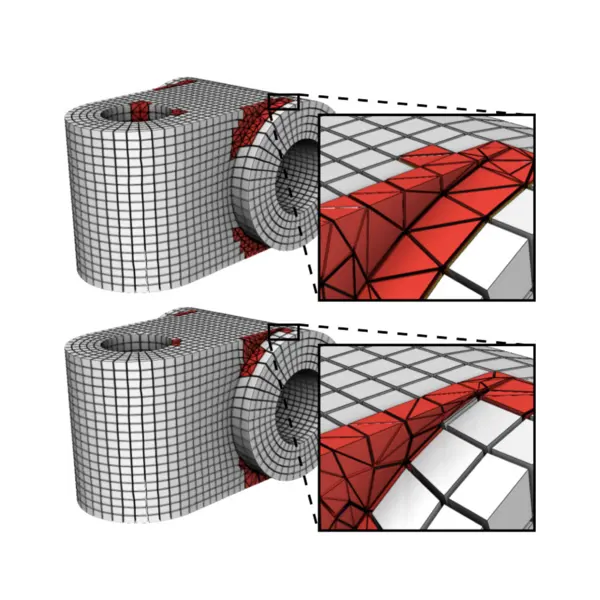

Computational analysis with the finite element method requires geometrically accurate meshes. It is well known that high-order meshes can accurately capture curved surfaces with fewer degrees of freedom in comparison to low-order meshes. Existing techniques for high-order mesh generation typically output meshes with same polynomial order for all elements. However, high order elements away from curvilinear boundaries or interfaces increase the computational cost of the simulation without increasing geometric accuracy. In prior work [5, 21], we have presented one such approach for generating body-fitted uniform-order meshes that takes a given mesh and morphs it to align with the surface of interest prescribed as the zero isocontour of a level-set function. We extend this method to generate mixed-order meshes such that curved surfaces of the domain are discretized with high-order elements, while low-order elements are used elsewhere. Numerical experiments demonstrate the robustness of the approach and show that it can be used to generate mixed-order meshes that are much more efficient than high uniform-order meshes. The proposed approach is purely algebraic, and extends to different types of elements (quadrilaterals/triangles/tetrahedron/hexahedra) in two- and three-dimensions.

Journal of Computational Physics, Volume 518, 2024, 113325, ISSN 0021-9991, 2024

abstract

Abstract

Monotonicity is very important in most applications solving elliptic problems. Many schemes preserving positivity has been proposed but are at most second-order convergent. Besides, in general, high-order schemes do not preserve positivity. In the present paper, we propose an arbitrary-order monotonic method for elliptic problems in 2D. We show how to adapt our method to the case of a discontinuous and/or tensorvalued diffusion coefficient, while keeping the order of convergence. We assess the new scheme on several test problems.

Thèse de Doctorat de l'Université Paris-Saclay, 2024

abstract

Abstract

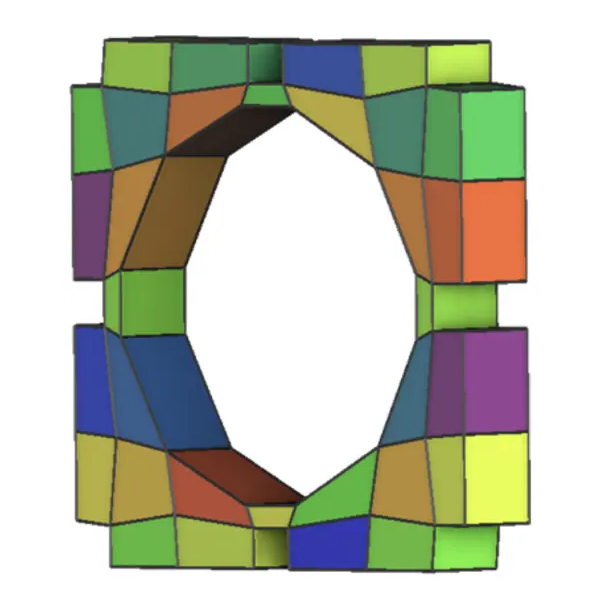

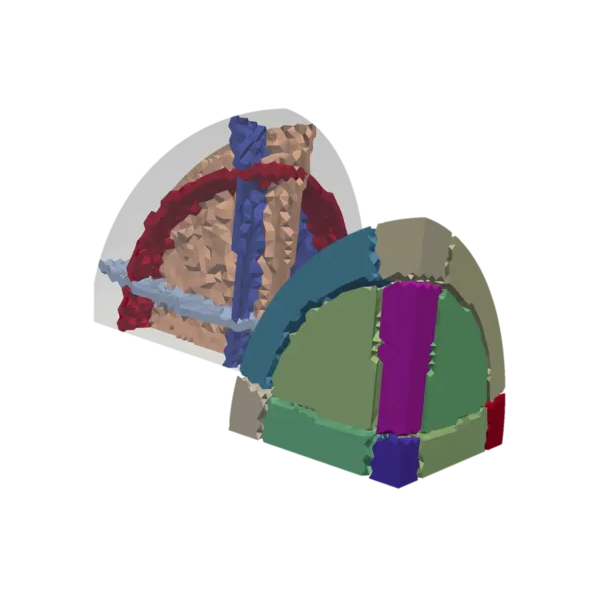

Ce travail de thèse porte sur la représentation et la génération de maillages hexaédriques structurés par blocs. Il n'existe pas à ce jour de méthode permettant de générer des structures de blocs satisfaisantes pour n'importe quel domaine géométrique. En pratique, des ingénieurs experts génèrent ces maillages avec des logiciels interactifs, ce qui nécessite parfois plusieurs semaines de travail. De plus, l'ajout d'opérations de modification dans ces logiciels interactifs est un travail délicat pour maintenir la cohérence de la structure de blocs et sa relation avec le domaine géométrique à discrétiser. Afin d'améliorer ce processus, nous proposons tout d'abord de définir des opérations de manipulation de maillages hexaédriques se basant sur l'utilisation du modèle des cartes généralisées. Ensuite, en considérant des structures de blocs obtenues à l'aide de la méthode des Polycubes, nous fournissons des méthodes optimisant la topologie de ces structures pour satisfaire des contraintes de nature géométrique. Nous proposons ainsi une première méthode en dimension 2, qui considère une approche locale du problème en s'appuyant sur l'expérience des ingénieurs manipulant des logiciels interactifs. Puis nous proposons une seconde méthode utilisant cette fois la méta-heuristique d'optimisation par colonie de fourmis pour la sélection de feuillets en dimension 3.

SIAM IMR24-SIAM International Meshing Roundtable Workshop, 2024

abstract

Abstract

This work aims to provide a method to generate block-structured meshes suitable for Computational Fluid Dynamics (CFD) simulations of flows around vehicles during atmospheric re-entry. This method takes as input a tetrahedral mesh of the domain, and the quadrangular block discretization of the vehicle surface. A linear blocking is obtained using an advancing front algorithm. This means it is incrementally created from the vehicle surface, layer by layer. Then, this linear blocking is curved, and we generate the final mesh. Some results of blocking and corresponding meshes generated with our algorithm are shown.

ISPRS Open Journal of Photogrammetry and Remote Sensing, 2024

abstract

Abstract

CNES is currently carrying out a Phase A study to assess the feasibility of a future hyperspectral imaging sensor (10 m spatial resolution) combined with a panchromatic camera (2.5 m spatial resolution). This mission focuses on both high spatial and spectral resolution requirements, as inherited from previous French studies such as HYPEX, HYPXIM, and BIODIVERSITY. To meet user requirements, cost, and instrument compactness constraints, CNES asked the French hyperspectral Mission Advisory Group (MAG), representing a broad French scientific community, to provide recommendations on spectral sampling, particularly in the Short Wave InfraRed (SWIR) for various applications. This paper presents the tests carried out with the aim of defining the optimal spectral sampling and spectral resolution in the SWIR domain for quantitative estimation of physical variables and classification purposes. The targeted applications are geosciences (mineralogy, soil moisture content), forestry (tree species classification, leaf functional traits), coastal and inland waters (bathymetry, water column, bottom classification in shallow water, coastal habitat classification), urban areas (land cover), industrial plumes (aerosols, methane and carbon dioxide), cryosphere (specific surface area, equivalent black carbon concentration), and atmosphere (water vapor, carbon dioxide and aerosols). All the products simulated in this exercise used the same CNES end-to-end processing chain, with realistic instrument parameters, enabling easy comparison between applications. 648 simulations 68 were carried out with different spectral strategies, radiometric calibration performances and signal-to-noise Ratios (SNR): 24 instrument configurations ´ 25 datasets (22 images + 3 spectral libraries). The results show that a 16/20 nm spectral sampling in the SWIR domain is sufficient for most applications. However, 10 nm spectral sampling is recommended for applications based onspecific absorption bands such as mineralogy, industrial plumes or atmospheric gases. In addition, a slight performance loss is generally observed when radiometric calibration accuracy decreases, with a few exceptions in bathymetry and in the cryosphere for which the observed performance is severely degraded. Finally, most applications can be achieved with the lowest SNR, with the exception of bathymetry, shallow water classification, as well as carbon dioxide and methane estimation, which require the higher SNR level tested. On the basis of these results, CNES is currently evaluating the best compromise for designing the future hyperspectral sensor to meet the objectives of priority applications.

Proceedings of the 21st ACM International Conference on Computing Frontiers: Workshops and Special Sessions, Association for Computing Machinery, p. 94-100, 2024

abstract

Abstract

The new emerging scientific workloads to be executed in the upcoming exascale supercomputers face major challenges in terms of storage, given their extreme volume of data. In particular, intelligent data placement, instrumentation, and workflow handling are central to application performance. The IO-SEA project developed multiple solutions to aid the scientific community in adressing these challenges: a Workflow Manager, a hierarchical storage management system, and a semantic API for storage. All of these major products incorporate additional minor products that support their mission. In this paper, we discuss both the roles of all these products and how they can assist the scientific community in achieving exascale performance.

Euro-Par 2024: Parallel Processing, Springer Nature Switzerland, p. 77-90, 2024

abstract

Abstract

With the advent of heterogeneous systems that combine CPUs and GPUs, designing a supercomputer becomes more and more complex. The hardware characteristics of GPUs significantly impact the performance. Choosing the GPU that will maximize performance for a limited budget is tedious because it requires predicting the performance on a non-existing hardware platform.

Microprocessors and Microsystems, Volume 110, October 2024, 105102, 2024

abstract

Abstract

RED-SEA is a H2020 EuroHPC project, whose main objective is to prepare a new-generation European Interconnect, capable of powering the EU Exascale systems to come, through an economically viable and technologically efficient interconnect, leveraging European interconnect technology (BXI) associated with standard and mature technology (Ethernet), previous EU-funded initiatives, as well as open standards and compatible APIs. To achieve this objective, the RED-SEA project is being carried out around four key pillars: (i) network architecture and workload requirements-interconnects co-design – aiming at optimizing the fit with the other EuroHPC projects and with the EPI processors; (ii) development of a high-performance, low-latency, seamless bridge with Ethernet; (iii) efficient network resource management, including congestion and Quality-of-Service; and (iv) end-to-end functions implemented at the network edges. This paper presents key achievements and results at the midterm of the project for each key pillar in the way to reach the final project objective. In this regard we can highlight: (i) The definition of the network requirements and architecture as well as a list of benchmarks and applications; (ii) In addition to initially planned IPs progress, BXI3 architecture has evolved to support natively Ethernet at low level, resulting in reduced complexity, with advantages in terms of cost optimization, and power consumption; (iii) The congestion characterization of target applications and proposals to reduce this congestion by the optimization of collective communication primitives, injection throttling and adaptive routing; and (iv) the low-latency high-message rate endpoint functions and their connection with new open technologies.

Thèse de Doctorat de l'Université Paris-Saclay, 2024

abstract

Abstract

Le Commissariat à l'Énergie Atomique et aux Énergies Alternatives (CEA) s'intéresse à la simulation d'écoulements fluides en régime supersonique et hypersonique dans le cadre de la rentrée atmosphérique. Pour ce faire, un code de simulation numérique dédié y est développé. Pour répondre à des contraintes fortes, ce code ne prend en entrée que des maillages hexaédriques structurés par blocs. Ce type de maillage est compliqué à générer, c'est le plus souvent réalisé à la main via l'utilisation de logiciels interactifs dédiés. Pour des géométries industrielles complexes, la génération d'un maillage est très couteuse en temps. A l'heure actuelle, la génération automatique de maillages hexaédriques est un sujet de recherche ouvert et complexe.Dans le cadre de ces travaux de thèse, nous proposons une méthode permettant de générer des maillages structurés par blocs courbes de domaines fluides autour de géométries dédiées pour les problématiques visées. Cette méthode a d'abord été prototypée dans le cadre de domaines 2D, puis étendue au cas 3D. Ici, la méthode est présentée dans le cas général, en dimension n. Elle se découpe en plusieurs étapes qui sont les suivantes.Dans un premier temps, une structure de blocs linéaire est obtenue par extrusion d'une première discrétisation de la paroi. Ces travaux sont une extension des travaux proposés par Ruiz-Girones et al.. Une fois cette structure de blocs linéaire obtenue, nous proposons deux manières distinctes de courber les blocs afin d'améliorer la représentation de la géométrie, et de limiter le lissage sur le maillage final. La première est à travers d'un processus de lissage de maillage à topologie fixe à l'aide d'un problème d'optimisation, auquel un terme de pénalité est ajouté pour aligner certaines arêtes du maillage aux interfaces. Dans notre processus, nous appliquons cette méthode de lissage à la structure de blocs pour l'aligner sur la surface du véhicule. Cette méthode étant pour l'instant trop couteuse en temps de calcul dans le cas 3D, nous proposons une seconde manière de courber les blocs, à travers une représentation à l'aide de courbes polynomiales de Béziers. Nous appliquons cette fois des opérations géométriques et locales afin d'aligner les blocs à la géométrie.Enfin, en partant du principe que les blocs sont représentés à l'aide de courbes de Bézier, nous générons un maillage final sur ces blocs courbes sous différentes contraintes. Finalement, nous évaluons la qualité des maillages générés à travers des critères purement géométriques, en étudiant l'impact des différents paramètres de notre méthode sur le maillage final. Nous évaluons également les maillages générés par la simulation d'écoulements fluides sur ces maillages, avec la comparaison à des données expérimentales, analytiques, ainsi qu'à des calculs de référence.

2023

SIAM International Meshing Roundtable 2023, Springer Nature Switzerland, p. 43-63, 2023

abstract

Abstract

This paper presents Coupe, a mesh partitioning platform. It provides solutions to solve different variants of the mesh partitioning problem, mainly in the context of load-balancing parallel mesh-based applications. From partitioning weights ensuring balance to topological partitioning that minimizes communication metrics through geometric methods, Coupe offers a large panel of algorithms to fit user-specific problems. Coupe exploits shared memory parallelism, is written in Rust, and consists of an open-source library and command line tools. Experimenting with different algorithms and parameters is easy. The code is available on Github.

Euro-Par 2022 International Workshops, Glasgow, UK, August 22–26, 2022, Revised Selected Papers, Glasgow, United Kingdom, 2023

abstract

Abstract

Mesh partitioning used for load balancing in distributed numerical simulations is typically managed with tools that are good enough but not optimal. Their use scope is not explicitly dedicated to load balancing, and they cannot make use of all available information. In this paper, the mesh partitioning problem and the context for its use are precisely defined. Then, existing tools are presented, along with their characteristics and features that are missing. Finally, a new partitioning platform – the subject of my PhD thesis – is presented: its architecture, software engineering choices made along the way, and how it can be the best fit for load balancing distributed simulations. The platform is open-source and is hosted on GitHub: https://github.com/LIHPC-Computational-Geometry/coupe .

IEEE International Conference on Quantum Computing and Engineering, 2023

abstract

Abstract

Quantum computers exploit the particular behavior of quantum physical systems to solve some problems in a different way than classical computers. We are now approaching the point where quantum computing could provide real advantages over classical methods. The computational capabilities of quantum systems will soon be available in future supercomputer architectures as hardware accelerators called Quantum Processing Units (QPU). From optimizing compilers to task scheduling, the High-Performance Computing (HPC) software stack could benefit from the advantages of quantum computing. We look here at the problem of register allocation, a crucial part of modern optimizing compilers. We propose a simple proof-of-concept hybrid quantum algorithm based on QAOA to solve this problem. We implement the algorithm and integrate it directly into GCC, a well-known modern compiler. The performance of the algorithm is evaluated against the simple Chaitin-Briggs heuristic as well as GCC's register allocator. While our proposed algorithm lags behind GCC's modern heuristics, it is a good first step in the design of useful quantum algorithms for the classical HPC software stack.

Communications in Computational Physics, 2023

abstract

Abstract

The DDFV (Discrete Duality Finite Volume) method is a finite volume scheme mainly dedicated to diffusion problems, with some outstanding properties. This scheme has been found to be one of the most accurate finite volume methods for diffusion problems. In the present paper, we propose a new monotonic extension of DDFV, which can handle discontinuous tensorial diffusion coefficient. Moreover, we compare its performance to a diamond type method with an original interpolation method relying on polynomial reconstructions. Monotonicity is achieved by adapting the method of Gao et al [A finite volume element scheme with a monotonicity correction for anisotropic diffusion problems on general quadrilateral meshes] to our schemes. Such a technique does not require the positiveness of the secondary unknowns. We show that the two new methods are second-order accurate and are indeed monotonic on some challenging benchmarks as a Fokker-Planck problem.

Computational & Applied Mathematics, vol 42, 2023

abstract

Abstract

When solving numerically an elliptic problem, it is important in most applications that the scheme used preserves the positivity of the solution. When using finite volume schemes on deformed meshes, the question has been solved rather recently. Such schemes are usually (at most) second-order convergent, and non-linear. On the other hand, many high-order schemes have been proposed that do not ensure positivity of the solution. In this paper, we propose a very high-order monotonic (that is, positivity preserving) numerical method for elliptic problems in 1D. We prove that this method converges to an arbitrary order (under reasonable assumptions on the mesh) and is indeed monotonic. We also show how to handle discontinuous sources or diffusion coefficients, while keeping the order of convergence. We assess the new scheme, on several test problems, with arbitrary (regular, distorted, and random) meshes.

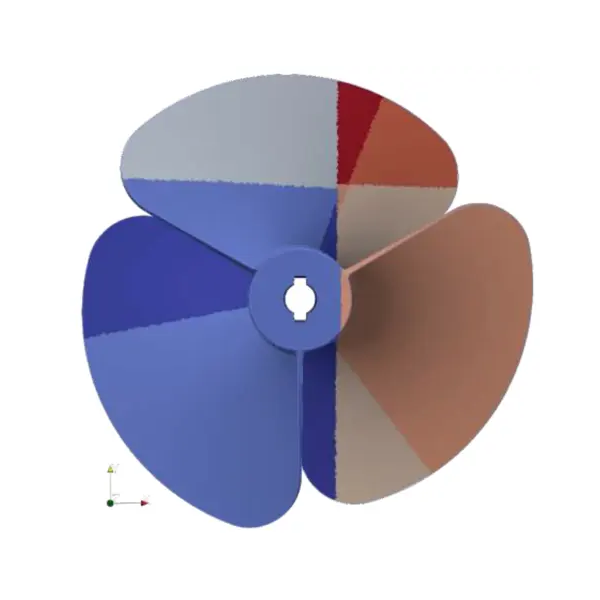

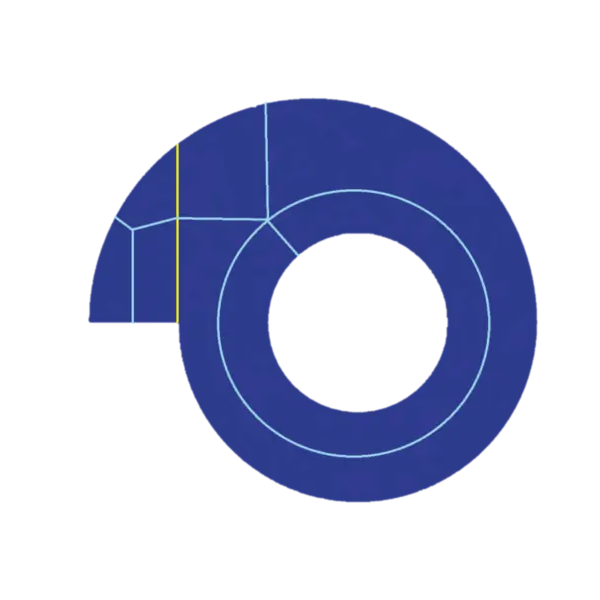

Abstract

Quad meshing is a very well-studied domain for many years. Although the problem can generally be considered solved, many approaches do not provide adequate inputs for Computational Fluid Dynamics (CFD) and, in our case, hypersonic flow simulations. Such simulations require very strong monitoring of cell size and direction. To our knowledge, engineers do this manually with the help of interactive software. In this work we propose an automatic algorithm to generate full quadrilateral block structured mesh for the purpose of hypersonic flow simulation. Using this approach we can handle some simulation input like the angle of attack and the boundary layer definition. We will present here 2D results of computation on a hypersonic vehicle using the meshes generated by our method.

SIAM CSE 2023 - SIAM Conference on Computational Science and Engineering, 2023

abstract

Abstract

Heterogeneous supercomputers with GPUs are one of the best candidates to build Exascale machines. However, porting scientific applications with millions of lines of code lines is challenging. Data transfers/locality and exposing enough parallelism determine the maximum achievable performance on such systems. Thus porting efforts impose developers to rewrite parts of the application which is tedious and time-consuming and does not guarantee performances in all the cases. Being able to detect which parts can be expected to deliver performance gains on GPUs is therefore a major asset for developers. Moreover, task parallel programming model is a promising alternative to expose enough parallelism while allowing asynchronous execution between CPU and GPU. OpenMP 4.5 introduces the « target » directive to offload computation on GPU in a portable way. Target constructions are considered as explicit OpenMP task in the same way as for CPU but executed on GPU. In this work, we propose a methodology to detect the most profitable loops of an application that can be ported on GPU. While we have applied the detection part on several mini applications (LULESH, miniFE, XSBench and Quicksilver), we experimented the full methodology on LULESH through MPI+OpenMP task programming model with target directives. It relies on runtime modifications to enable overlapping of data transfers and kernel execution through tasks. This work has been integrated into the MPC framework, and has been validated on distributed heterogeneous system.

52nd International Conference on Parallel Processing (ICPP 2023), 2023

abstract

Abstract

The architecture of supercomputers is evolving to expose massive parallelism. MPI and OpenMP are widely used in application codes on the largest supercomputers in the world. The community primarily focused on composing MPI with OpenMP before its version 3.0 introduced task-based programming. Recent advances in OpenMP task model and its interoperability with MPI enabled fine model composition and seamless support for asynchrony. Yet, OpenMP tasking overheads limit the gain of task-based applications over their historical loop parallelization (parallel for construct). This paper identifies the OpenMP task dependency graph discovery speed as a limiting factor in the performance of task-based applications. We study its impact on intra and inter-node performances over two benchmarks (Cholesky, HPCG) and a proxy-application (LULESH). We evaluate the performance impacts of several discovery optimizations, and introduce a persistent task dependency graph reducing overheads by a factor up to 15 at run-time. We measure 2x speedup over parallel for versions weak scaled to 16K cores, due to improved cache memory use and communication overlap, enabled by task refinement and depth-first scheduling.

IWOMP 23 - International Workshop on OpenMP, 2023

abstract

Abstract

Many-core and heterogeneous architectures now require programmers to compose multiple asynchronous programming model to fully exploit hardware capabilities. As a shared-memory parallel programming model, OpenMP has the responsibility of orchestrating the suspension and progression of asynchronous operations occurring on a compute node, such as MPI communications or CUDA/HIP streams. Yet, specifications only come with the task detach(event) API to suspend tasks until an asynchronous operation is completed, which presents a few drawbacks. In this paper, we introduce the design and implementation of an extension on the taskwait construct to suspend a task until an asynchronous event completion. It aims to reduce runtime costs induced by the current solution, and to provide a standard API to automate portable task suspension solutions. The results show twice less overheads compared to the existing task detach clause.

Abstract

High-Performance Computing (HPC) is currently facing significant challenges. The hardware pressure has become increasingly difficult to manage due to the lack of parallel abstractions in applications. As a result, parallel programs must undergo drastic evolution to effectively exploit underlying hardware parallelism. Failure to do so results in inefficient code. In this pressing environment, parallel runtimes play a critical role, and their esting becomes crucial. This paper focuses on the MPI interface and leverages the MPI binding tools to develop a multi-language test-suite for MPI. By doing so and building on previous work from the Forum’s document editors, we implement a systematic testing of MPI symbols in the context of the Parallel Computing Validation System (PCVS), which is an HPC validation platform dedicated to running and managing test-suites at scale. We first describe PCVS, then outline the process of generating the MPI API test suite, and finally, run these tests at scale. All data sets, code generators, and implementations are made available in open-source to the community. We also set up a dedicated website showcasing the results, which self-updates thanks to the Spack package manager.

ISC High Performance 2023: High Performance Computing pp 28–41, 2023

abstract

Abstract

The field of High-Performance Computing is rapidly evolving, driven by the race for computing power and the emergence of new architectures. Despite these changes, the process of launching programs has remained largely unchanged, even with the rise of hybridization and accelerators. However, there is a need to express more complex deployments for parallel applications to enable more efficient use of these machines. In this paper, we propose a transparent way to express malleability within MPI applications. This process relies on MPI process virtualization, facilitated by a dedicated privatizing compiler and a user-level scheduler. With this framework, using the MPC thread-based MPI context, we demonstrate how code can mold its resources without any software changes, opening the door to transparent MPI malleability. After detailing the implementation and associated interface, we present performance results on representative applications.

Abstract

MPI is the most widely used interface for high-performance computing (HPC) workloads. Its success lies in its embrace of libraries and ability to evolve while maintaining backward compatibility for older codes, enabling them to run on new architectures for many years. In this paper, we propose a new level of MPI compatibility: a standard Application Binary Interface (ABI). We review the history of MPI implementation ABIs, identify the constraints from the MPI standard and ISO C, and summarize recent efforts to develop a standard ABI for MPI. We provide the current proposal from the MPI Forum’s ABI working group, which has been prototyped both within MPICH and as an independent abstraction layer called Mukautuva. We also list several use cases that would benefit from the definition of an ABI while outlining the remaining constraints.

Abstract

The coupling through both drag force and volume fraction (of gas) of a kinetic equation of Vlasov type and a system of Euler or Navier–Stokes type (in which the volume fraction explicity appears) leads to the so-called thick sprays equations. Those equations are used to describe sprays (droplets or dust specks in a surrounding gas) in which the volume fraction of the disperse phase is non negligible. As for other multiphase flows systems, the issues related to the linear stability around homogeneous solutions is important for the applications. We show in this paper that this stability indeed holds for thick sprays equations, under physically reasonable assumptions. The analysis which is performed makes use of Lyapunov functionals for the linearized equations.

ACM Transactions on Mathematical Software, Volume 48, Issue 4, 2023

abstract

Abstract

Floating-point numbers represent only a subset of real numbers. As such, floating-point arithmetic introduces approximations that can compound and have a significant impact on numerical simulations. We introduce encapsulated error, a new way to estimate the numerical error of an application and provide a reference implementation, the Shaman library. Our method uses dedicated arithmetic over a type that encapsulates both the result the user would have had with the original computation and an approximation of its numerical error. We thus can measure the number of significant digits of any result or intermediate result in a simulation. We show that this approach, although simple, gives results competitive with state-of-the-art methods. It has a smaller overhead, and it is compatible with parallelism, making it suitable for the study of large-scale applications.

Abstract

The exploitation of urban-material spectral properties is of increasing importance for a broad range of applications, such as urban climate-change modeling and mitigation or specific/dangerous roof-material detection and inventory. A new spectral library dedicated to the detection of roof material was created to reflect the regional diversity of materials employed in Wallonia, Belgium. The Walloon Roof Material (WaRM) spectral library accounts for 26 roof material spectra in the spectral range 350–2500 nm. Spectra were acquired using an ASD FieldSpec3 Hi-Res spectrometer in laboratory conditions, using a spectral sampling interval of 1 nm. The analysis of the spectra shows that spectral signatures are strongly influenced by the color of the roof materials, at least in the VIS spectral range. The SWIR spectral range is in general more relevant to distinguishing the different types of material. Exceptions are the similar properties and very close spectra of several black materials, meaning that their spectral signatures are not sufficiently different to distinguish them from each other. Although building materials can vary regionally due to different available construction materials, the WaRM spectral library can certainly be used for wider applications; Wallonia has always been strongly connected to the surrounding regions and has always encountered climatic conditions similar to all of Northwest Europe.

International Meshing Roundtable, 2023

abstract

Abstract

Quad meshing is a very well-studied domain for many years. While the problem can be globally considered as solved, many approaches do not provide suitable inputs for Computational Fluid Dynamics (CFD) and in our case for supersonic flow simulations. Such simulations require a very strong control on the cell size and direction. To our knowledge, engineers ensure this control manually using interactive software. In this work we propose an automatic algorithm to generate full quadrilateral block structured mesh for the purpose of supersonic flow simulation. We handle some simulation input like the angle of attack and the boundary layer definition. Our approach generates adequate 2D meshes and is designed to be extensible in 3D.

Thèse de Doctorat de l'Université Paris-Saclay, 2023

abstract

Abstract

Cette étude s'inscrit dans le domaine de l'optimisation de performances de simulations numériques distribuées à grande échelle à base de maillages. Dans ce domaine, nous nous intéressons au bon équilibre de charge entre les unités de calcul sur lesquelles la simulation s'exécute. Pour équilibrer la charge d'une simulation à base de maillage, il faut généralement prendre en compte de la quantité de calcul nécessaire pour chaque maille, ainsi que la quantité de données qui doivent être transférées entre les unités de calcul. Les outils communément utilisés pour résoudre ce problème le solvent d'une manière, qui n'est pas forcément optimale pour une simulation donnée, car ils s'appliquent à de nombreux cas autres que l'équilibrage de charge et le partitionnement de maillage. Notre étude consiste à concevoir et implémenter un nouvel outil de partitionnement dédié aux maillages et à l'équilibrage de charge. Après une explication approfondie du contexte de l'étude, des problèmes de partitionnement ainsi que de l'état de l'art des algorithmes de partitionnement, nous montrons l'intérêt de chaîner des algorithmes pour optimiser de différentes façon une partition de maillage. Ensuite, nous étoffons cette méthode de chaînage en deux points: d'abord, en étendant l'algorithme de partitionnement de nombres VNBest pour l'équilibrage de charge où les unités de calcul sont hétérogènes, puis en spécialisant l'algorithme de partitionnement géométrique RCB, pour améliorer ses performances sur les maillages cartésiens. Nous décrivons en détails le processus de conception de notre outil de partitionnement, qui fonctionne exclusivement en mémoire partagée. Nous montrons notre outil peut obtenir des partitions avec un meilleur équilibre de charge que deux outils de partitionnement en mémoire partagée existants, Scotch et Metis. Cependant, nous ne minimisons pas aussi bien les transferts de données entre unités de calcul. Nous présentons les caractéristiques de performance des algorithmes implémentés en *multithread*.

SIAM International Meshing Roundtable, 2023

abstract

Abstract

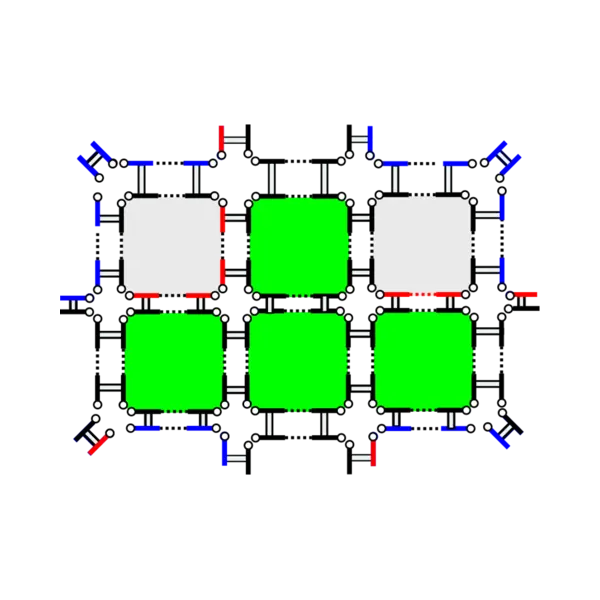

Nowadays for real study cases, the generation of full block structured hexahedral meshes is mainly an interactive and very-time consuming process realized by highly-qualified engineers. To this purpose, they use interactive software where they handle and modify complex block structures with operations like block removal, block insertion, O-grid insertion, propagation of block splitting, propagation of meshing parameters along layers of blocks and so on. Such operations are error-prone and modifying or adding an operation is a very tedious work. In this work, we propose to formally define hexahedral block structures and main associated operations in the model of n-dimensional generalized map. This model provides topological invariant and a systematic handling of geometric data that allows us to ensure the expected robustness.

SLE '23: 16th ACM SIGPLAN International Conference on Software Language Engineering, 2023

abstract

Abstract

Software languages have pros and cons, and are usually chosen accordingly. In this context, it is common to involve different languages in the development of complex systems, each one specifically tailored for a given concern. However, these languages create de facto silos, and offer little support for interoperability with other languages, be it statically or at runtime. In this paper, we report on our experiment on extracting a relevant behavioral interface from an existing language, and using it to enable interoperability at runtime. In particular, we present a systematic approach to define the behavioral interface and we discuss the expertise required to define it. We illustrate our work on the case study of SciHook, a C++ library enabling the runtime instrumentation of scientific software in Python. We present how the proposed approach, combined with SciHook, enables interoperability between Python and a domain-specific language dedicated to numerical analysis, namely NabLab, and discuss overhead at runtime.

Thèse de Doctorat de l'Université Paris Cité, 2023

abstract

Abstract

The objective of this thesis is the development and the analysis of robust and accurate finite volume schemes for the approximation of the solution of the diffusion equation on deformed meshes with diffusion coefficient which can be anisotropic and/or discontinuous. To satisfy these properties, our schemes must preserve the positivity and achieve high-order accuracy. In this manuscript, we propose the first positivity-preserving arbitrary-order scheme for diffusion. Our approach is first to study the problem in 1D. In such a case, the positivity problem only appears for order 3 and higher. The 1D setting allows us to perform the mathematical analysis of this problem, including a proof of convergence of the scheme to an arbitrary order under a stability assumption. We then extend it to 2D at order 2, relying on well-known schemes. We study two possibilities: a DDFV-type scheme (Discrete Duality Finite Volume), which we compare with a method using polynomial reconstruction. Finally, this allows us to develop a monotonic scheme of arbitrary order on any mesh with a kappa diffusion coefficient that can be discontinuous and/or anisotropic. Improving the order is achieved through polynomial reconstruction, and monotonicity is obtained by reducing to a M-matrix structure, which gives nonlinear schemes. Each scheme is validated by numerical simulations showing the order of convergence and the positivity of the solution obtained.

Thèse de Doctorat de l'Université Paris-Saclay, 2023

abstract

Abstract

Cette thèse présente NFS-Ganesha, un serveur NFS en espace utilisateur pour le HPC, et ses évolutions depuis sa création à l'aube des années 2000 jusqu'à la période Exascale actuelle. Créé à l'origine pour des besoins opérationnels liés à l'exploitation des grands systèmes de stockage, NFS-Ganesha a été pensé pour être générique et parallélisé. L'apparition conjointe des systèmes de fichiers parallèles, donnant naissance aux architectures «data-centriques» de centre de calcul, et celle du protocole NFSv4 vont faire évoluer de NFS-Ganesha qui va devenir un serveur NFS générique capable de s'interfacer avec de nombreux backends. L'évolution de NFSv4, sous la forme de NFSv4.1 et du protocole pNFS, fera de NFS-Ganesha un standard adopté par une forte communauté open-source impliquant chercheurs et industriels. NFS-Ganesha sera utilisé pour réaliser la fonctionnalité IO-Proxy, et la création de nouveaux protocoles parallèles afférents. Impliqués dans des projets de R&D européens, NFS-Ganesha servira à implémenter la fonctionnalité de serveur éphémère afin de répondre aux exigences de l'Exascale.

SC-W '23: Proceedings of the SC '23 Workshops of The International Conference on High Performance Computing, Network, Storage, and Analysis, 2023

abstract

Abstract

HPC application developers and administrators need to understand the complex interplay between compute clusters and storage systems to make effective optimization decisions. Ad hoc investigations of this interplay based on isolated case studies can lead to conclusions that are incorrect or difficult to generalize. The I/O Trace Initiative aims to improve the scientific community’s understanding of I/O operations by building a searchable collaborative archive of I/O traces from a wide range of applications and machines, with a focus on high-performance computing and scalable AI/ML. This initiative advances the accessibility of I/O trace data by enabling users to locate and compare traces based on user-specified criteria. It also provides a visual analytics platform for in-depth analysis, paving the way for the development of advanced performance optimization techniques. By acting as a hub for trace data, the initiative fosters collaborative research by encouraging data sharing and collective learning.

Journal of Computational Physics, Volume 478, 1 April 2023, 2023

abstract

Abstract

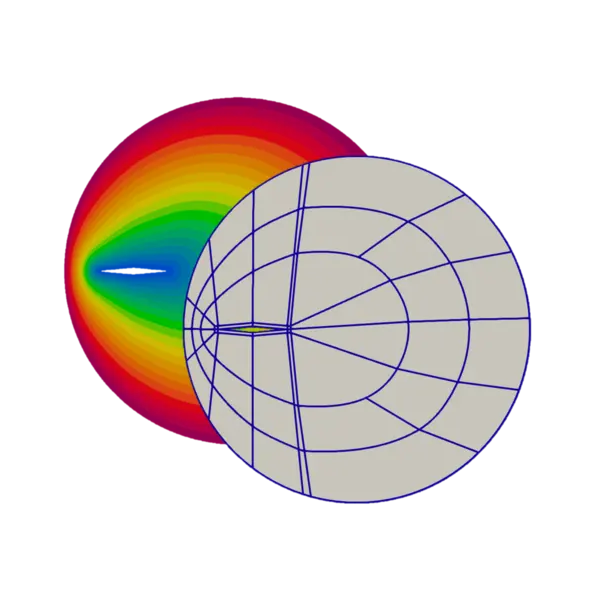

In this paper, we propose an adaptive mesh refinement method for 2D multi-material compressible non-viscous flows in semi-Lagrangian coordinates. The mesh adaptation procedure is local and relies on a discrete metric field evaluation. The remapping method is second-order accurate and we prove its stability. We propose a multi-material treatment using two ingredients: the local remeshing is performed in a way that reduces as much as possible the creation of mixed cells and an interface reconstruction method that can be used to avoid the diffusion of the material interfaces. The obtained method is almost Lagrangian and can be implemented in a parallel framework. We provide some numerical tests which attest the validity of the method and its robustness.

ESAIM M2AN Volume 57, Number 2, March-April 2023, 2023

abstract

Abstract

We construct an original framework based on convex analysis to prove the existence and uniqueness of a solution to a class of implicit numerical schemes. We propose an application of this general framework in the case of a new non linear implicit scheme for the 1D Lagrangian gas dynamics equations. We provide numerical illustrations that corroborate our proof of unconditional stability for this non linear implicit scheme.

2022

Proceedings of SBAC-PAD 2022, IEEE, 2022

abstract

Abstract

HPC systems have experienced significant growth over the past years, with modern machines having hundreds of thousands of nodes. Message Passing Interface (MPI) is the de facto standard for distributed computing on these architectures. On the MPI critical path, the message-matching process is one of the most time-consuming operations. In this process, searching for a specific request in a message queue represents a significant part of the communication latency. So far, no miracle algorithm performs well in all cases. This paper explores potential matching specializations thanks to hints introduced in the latest MPI 4.0 standard. We propose a hash-table-based algorithm that performs constant time message-matching for no wildcard requests. This approach is suitable for intensive point-to-point communication phases in many applications (more than 50% of CORAL benchmarks). We demonstrate that our approach can improve the overall execution time of real HPC applications by up to 25%. Also, we analyze the limitations of our method and propose a strategy for identifying the most suitable algorithm for a given application. Indeed, we apply machine learning techniques for classifying applications depending on their message pattern characteristics.

Concurr. Comput. Pract. Exp., 2022

abstract

Abstract

By allowing computation/communication overlap, MPI nonblocking collectives (NBC) are supposed to improve application scalability and performance. However, it is known that to actually get overlap, the MPI library has to implement progression mechanisms in software or rely on the network hardware. These mechanisms may be present or not, adequate or perfectible, they may have an impact on communication performance or may interfere with computation by stealing CPU cycles. From a user point of view, assessing and understanding the behavior of an MPI library concerning computation/communication overlap is difficult. In this article, we propose a methodology to assess the computation/communication overlap of NBC. We propose new metrics to measure how much communication and computation do overlap, and to evaluate how they interfere with each other. We integrate these metrics into a complete methodology. We compare our methodology with state of the art metrics and benchmarks, and show that ours provides more meaningful informations. We perform experiments on a large panel of MPI implementations and network hardware and show when and why overlap is efficient, nonexistent or even degrades performance.

Abstract

The ablation of a vehicle during atmospheric reentry leads to a degradation of its surface condition. Ablated wall interacts with the boundary layer that develops around the object. The deformation can be seen as a ripple or a roughness pattern with different characteristic amplitudes and wavelenghts. The effect of this defect on the flow is taken into account either by means of modelizations or by direct simulation by applying the strains to the mesh. Mesh adaptation techniques can be used in order to take into account wall deformations during a simulation. The principle is to start from an initially smooth mesh, to apply a strain law, then to use regularization and refinement methods. The meshes will be adapted for use in a parallel CFD Navier-Stokes code. A refinement of the mesh close to the wall is required to correctly capture the boundary layer [2], but also to accuratly represent the geometry of the wall deformation. For the numerical methods used, a constraint of orthogonality is added to the mesh impining on the wall. The developments are for the moments, carried out in an independent external tool. The regularization methods are compared on results of simulations with different meshes. The method can be easily coupled with a CFD code and can be extended to 3D geometries.

22nd IEEE International Symposium on Cluster, Cloud and Internet Computing, CCGrid 2022, Taormina, Italy, May 16-19, 2022, IEEE, p. 736-746, 2022

abstract

Abstract

Overlapping communications with computation is an efficient way to amortize the cost of communications of an HPC application. To do so, it is possible to utilize MPI nonblocking primitives so that communications run in back-ground alongside computation. However, these mechanisms rely on communications actually making progress in the background, which may not be true for all MPI libraries. Some MPI libraries leverage a core dedicated to communications to ensure communication progression. However, taking a core away from the application for such purpose may have a negative impact on the overall execution time. It may be difficult to know when such dedicated core is actually helpful. In this paper, we propose a model for the performance of applications using MPI nonblocking primitives running on top of an MPI library with a dedicated core for communications. This model is used to understand the compromise between computation slowdown due to the communication core not being available for computation, and the communication speed-up thanks to the dedicated core; evaluate whether nonblocking communication is actually obtaining the expected performance in the context of the given application; predict the performance of a given application if ran with a dedicated core. We describe the performance model and evaluate it on different applications. We compare the predictions of the model with actual executions.

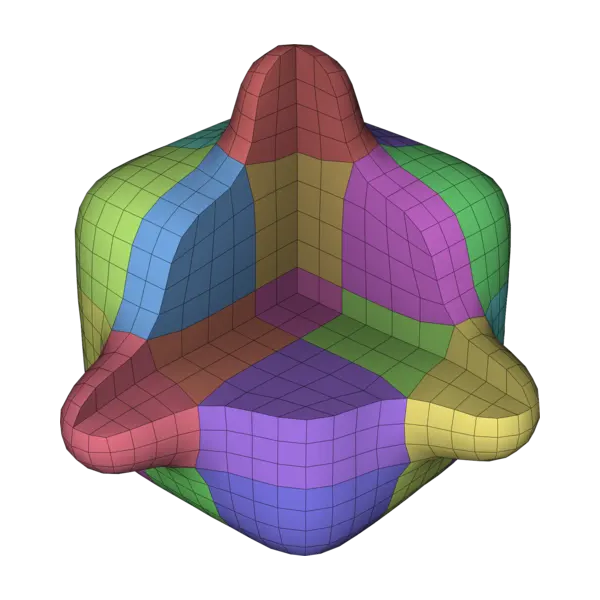

Abstract

Polycube-maps are used as base-complexes in various fields of computational geometry, including the generation of regular all-hexahedral meshes free of internal singularities. However, the strict alignment constraints behind polycube-based methods make their computation challenging for CAD models used in numerical simulation via finite element method (FEM). We propose a novel approach based on an evolutionary algorithm to robustly compute polycube-maps in this context. We address the labelling problem, which aims to precompute polycube alignment by assigning one of the base axes to each boundary face on the input. Previous research has described ways to initialize and improve a labelling via greedy local fixes. However, such algorithms lack robustness and often converge to inaccurate solutions for complex geometries. Our proposed framework alleviates this issue by embedding labelling operations in an evolutionary heuristic, defining fitness, crossover, and mutations in the context of labelling optimization. We evaluate our method on a thousand smooth and CAD meshes, showing Evocube converges to accurate labellings on a wide range of shapes. The limitations of our method are also discussed thoroughly.

Abstract

An important part of recent advances in hexahedral meshing focuses on the deformation of a domain into a polycube; the polycube deformed by the inverse map fills the domain with a hexahedral mesh. These methods are appreciated because they generate highly regular meshes. In this paper we address a robustness issue that systematically occurs when a coarse mesh is desired: algorithms produce deformations that are not one-to-one, leading to collapse of large portions of the model when trying to apply the (undefined) inverse map. The origin of the problem is that the deformation requires to perform a mixed integer optimization, where the difficulty to enforce the integer constraints is directly tied to the expected coarseness. Our solution is to introduce sanity constraints preventing the loss of bijectivity due to the integer constraints.

Abstract

In this article, we provide a detailed survey of techniques for hexahedral mesh generation. We cover the whole spectrum of alternative approaches to mesh generation, as well as post-processing algorithms for connectivity editing and mesh optimization. For each technique, we highlight capabilities and limitations, also pointing out the associated unsolved challenges. Recent relaxed approaches, aiming to generate not pure-hex but hex-dominant meshes, are also discussed. The required background, pertaining to geometrical as well as combinatorial aspects, is introduced along the way.

Abstract

Abstract HexMe consists of 189 tetrahedral meshes with tagged features and a workflow to generate them. The primary purpose of HexMe meshes is to enable consistent and practically meaningful evaluation of hexahedral meshing algorithms and related techniques, specifically regarding the correct meshing of specified feature points, curves, and surfaces. The tetrahedral meshes have been generated with Gmsh, starting from 63 computer-aided design (CAD) models from various databases. To highlight and label the diverse and challenging aspects of hexahedral mesh generation, the CAD models are classified into three categories: simple, nasty, and industrial. For each CAD model, we provide three kinds of tetrahedral meshes (uniform, curvature-adapted, and box-embedded). The mesh generation pipeline is defined with the help of Snakemake, a modern workflow management system, which allows us to specify a fully automated, extensible, and sustainable workflow. It is possible to download the whole dataset or select individual meshes by browsing the online catalog. The HexMe dataset is built with evolution in mind and prepared for future developments. A public GitHub repository hosts the HexMe workflow, where external contributions and future releases are possible and encouraged. We demonstrate the value of HexMe by exploring the robustness limitations of state-of-the-art frame-field-based hexahedral meshing algorithm. Only for 19 of 189 tagged tetrahedral inputs all feature entities are meshed correctly, while the average success rates are 70.9% / 48.5% / 34.6% for feature points/curves/surfaces.

IWOMP 2022 - 18th International Workshop on OpenMP, p. 1-14, 2022

abstract

Abstract

Heterogeneous supercomputers are widespread over HPC systems and programming efficient applications on these architectures is a challenge. Task-based programming models are a promising way to tackle this challenge. Since OpenMP 4.0 and 4.5, the target directives enable to offload pieces of code to GPUs and to express it as tasks with dependencies. Therefore, heterogeneous machines can be programmed using MPI+OpenMP(task+target) to exhibit a very high level of concurrent asynchronous operations for which data transfers, kernel executions, communications and CPU computations can be overlapped. Hence, it is possible to suspend tasks performing these asynchronous operations on the CPUs and to overlap their completion with another task execution. Suspended tasks can resume once the associated asynchronous event is completed in an opportunistic way at every scheduling point. We have integrated this feature into the MPC framework and validated it on a AXPY microbenchmark and evaluated on a MPI+OpenMP(tasks) implementation of the LULESH proxy applications. The results show that we are able to improve asynchronism and the overall HPC performance, allowing applications to benefit from asynchronous execution on heterogeneous machines.

Mesh Generation and Adaptation: Cutting-Edge Techniques, Springer International Publishing, p. 69-94, 2022

abstract

Abstract

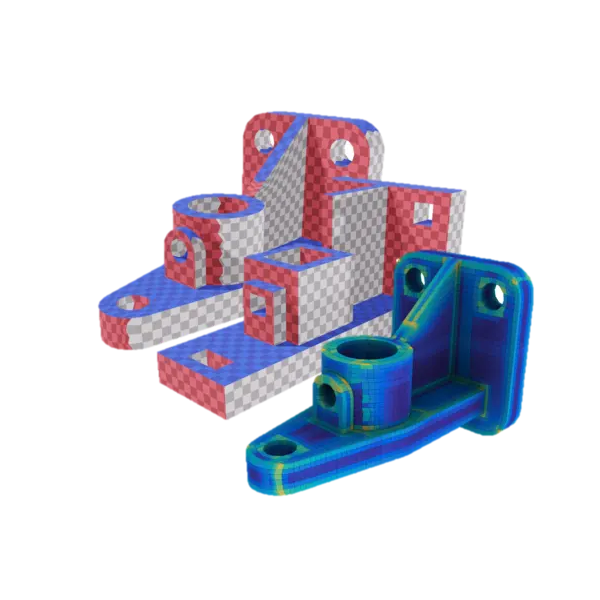

In this chapter, we deal with the problem of mesh conversion for coupling lagrangian and eulerian simulation codes. More specifically, we focus on hexahedral meshes, which are known as pretty difficult to generate and handle. Starting from an eulerian hexahedral mesh, i.e. a hexahedral mesh where each cell contains several materials, we provide a full-automatic process that generates a lagrangian hexahedral mesh, i.e. a hexahedral mesh where each cell contains a single material. This process is simulation-driven in the meaning that the we guarantee that the generated mesh can be used by a simulation code (minimal quality for individual cells), and we try and preserve the volume and location of each material as best as possible. In other words, the obtained lagrangian mesh fits the input eulerian mesh with high-fidelity. To do it, we interleave several advanced meshing treatments--mesh smoothing, mesh refinement, sheet insertion, discrete material reconstruction, discrepancy computation, in a fully integrated pipeline. Our solution is evaluated on 2D and 3D examples representative of CFD simulation (Computational Fluid Dynamics).

Thèse de Doctorat de l'Université de Paris-Saclay, 2022

abstract

Abstract

Les codes de simulation numérique reposant sur des méthodes de type éléments et volumes finis requièrent de discrétiser le domaine étudié – par exemple une pièce mécanique telle qu’un moteur, une aile d’avion, une turbine, etc. – à l’aide d’un maillage. En dimension 3, un maillage est un ensemble composé d’éléments volumique simples, le plus souvent des tétraèdres ou des hexaèdres, qui partitionnent le domaine d’étude. Le choix de tétraèdres ou d’hexaèdres est principalement dicté par l’application (interaction fluide-structure, hydrodynamique, etc.). Si la génération automatique de maillages tétraédriques est un processus relativement maîtrisé aujourd’hui, générer des maillages hexaédriques est toujours un problème ouvert. Ceci est problématique pour les applications qui justement nécessitent impérativement des maillages hexaédriques puisque leur génération se fait de façon semi-automatique, ce qui peut prendre plusieurs semaines à plusieurs mois de temps ingénieur ! Alors que le temps consacré au processus de simulation numérique à proprement parler tend à diminuer du fait de la puissance des machines utilisées, le goulot d’étranglement est désormais dans la préparation des données, à savoir obtenir un modèle de CAO adapté au calcul, puis en générer un maillage.C’est dans ce contexte que s’inscrit la thèse proposée en suivant une approche hybride mêlant :1. Le développement d’algorithmes (semi)-automatiques pour générer et modifier des maillages hexaédriques structurés par blocs ;2. La mise en place d’un logiciel graphique interactif dédié à la manipulation de structures de blocs. Les mécanismes d’interaction seront en outre utilisés pour guider les algorithmes dans leurs prises de décision, que ce soit à l’initialisation (critères à apposer sur des entités particulières de CAO) ou en cours d’algorithme (décision entre plusieurs options sur lesquelles l’algorithme ne peut se prononcer seul).L’objectif de cette thèse n’est donc pas de fournir une solution automatique universelle, ce qui semble inatteignable actuellement, mais plutôt de réduire le temps ingénieur consacré à la génération du maillage en fournissant des outils plus adaptés. Dans cette optique, nous proposons de placer l’étude dans le prolongement de [LED10, KOW12, GAO15, GAO17], où est considéré le problème de simplification et d’enrichissement de maillages hexaédriques par insertion et suppression de couches de mailles. Dans tous ces travaux, les algorithmes proposés sont des algorithmes simples de type « glouton » où le maillage est modifié pas à pas pour converger vers une solution finale Ef : A chaque étape Ei, on fait l’hypothèse que la « meilleure » solution Ef sera obtenue en faisant le choix « optimal » pour Ei. Or en recherche opérationnelle, une telle approche est connue comme perfectible dès lors que le problème d’optimisation traité est non linéaire. L’idée est donc d’utiliser des approches usuelles en recherche opérationnelle et plus spécifiquement des systèmes multi-agents, couplées à des outils interactifs, pour permettre la génération de structures de blocs sur des CA0 complexes.

Thèse de Doctorat de l'Université de Bordeaux, 2022

abstract

Abstract

De nos jours, MPI est de facto le standard pour la programmation à mémoire distribuée pour les supercalculateurs. Les communications non bloquantes sont un des modèles proposés par le standard MPI. Ces opérations peuvent être utilisées pour recouvrir les communications avec du calcul (ou d’autres communications) afin d’amortir leurs coûts. Cependant, pour être utilisées efficacement, ces opérations nécessitent une progression asynchrone pouvant régulièrement utiliser un montant non négligeable de ressources de calcul (particulièrement les collectives non bloquantes). De plus, partager les ressources de calcul avec l’application peut provoquer un ralentissement global. Les mécanismes utilisés pour cette progression asynchrone parviennent difficilement à concilier un bon recouvrement en gardant un impact minimal sur l’application, ce qui raréfie leur utilisation. Afin de résoudre ces différents problèmes, nous avons suivi plusieurs étapes. Premièrement, nous proposons une étude approfondie de la progression asynchrone dans les implémentations MPI, en utilisant de nouvelles métriques se concentrant sur l’évaluation des mécanismes de progression et de leur impact sur le système global. Après avoir exposé les faiblesses de ces implémentations MPI, nous proposons une nouvelle solution pour la progression des collectives non bloquantes en utilisant des coeurs dédiés combinés à des algorithmes de collectives basés sur des évènements. Nous avons mesuré l’efficacité de cette solution en utilisant nos métriques, pour nous comparer avec les implémentations MPI étudiées dans la première étape. Enfin, nous avons développé un modèle permettant de prédire le gain potentiel et le surcout induit par l’utilisation d’opérations non bloquantes avec des coeurs dédiés. Ce modèle peut être utilisé pour évaluer l’utilité de transformer une application basée sur des opérations bloquantes en opérations non bloquantes pour bénéficier du recouvrement. Nous évaluons ce modèle sur plusieurs benchmarks.

Computing in Science & Engineering ( Volume: 24, Issue: 4, 01 July-Aug. 2022), 2022

abstract

Abstract

Scientific codes are complex software systems. Their engineering involves various stakeholders using various computer languages for defining artifacts at different abstraction levels and for different purposes. In this article, we review the overall processes leading to the development of scientific software, and discuss the role of computer languages in the definition of the different artifacts. We provide guidelines to make informed decisions when the time comes to choose a computer language to develop scientific software.

EuroVis 2019 - 21th EG/VGTC Conference on Visualization, 2022

abstract

Abstract

With the constant increase in compute power of supercomputers, high performance computing simulations are producing higher fidelity results and possibly massive amounts of data. To keep visualization of such results interactive, existing techniques such as Adaptive Mesh Refinement (AMR) can be of use. In particular, Tree-Based AMR methods (TB-AMR) are widespread in simulations and are becoming more present in general purpose visualization pipelines such as VTK. In this work, we show how TB-AMR data structures could lead to more efficient exploration of massive data sets in the Exascale era. We discuss how algorithms (filters) should be designed to take advantage of tree-like data structures for both data filtering or rendering. By introducing controlled hierarchical data reduction we greatly reduce the processing time for existing algorithms, sometimes with no visual impact, and drastically decrease exploration time for analysts. Also thanks to the techniques and implementations we propose, visualization of very large data is made possible on very constrained resources. These ideas are illustrated on million to billion-scale native TB-AMR or resampled meshes, with the HyperTreeGrid object and associated filters we have recently optimized and made available in the Visualisation Toolkit (VTK) for use by the scientific community.

Zenodo, 2022

abstract

Abstract

This document feeds research and development priorities devel-oped by the European HPC ecosystem into EuroHPC’s Research and Innovation Advisory Group with an aim to define the HPC Technology research Work Programme and the calls for proposals included in it and to be launched from 2023 to 2026. This SRA also describes the major trends in the deployment of HPC and HPDA methods and systems, driven by economic and societal needs in Europe, taking into account the changes ex-pected in the technologies and architectures of the expanding underlying IT infrastructure. The goal is to draw a complete pic-ture of the state of the art and the challenges for the next three to four years rather than to focus on specific technologies, implementations or solutions.

2021

Tools for High Performance Computing 2018 / 2019, Springer International Publishing, p. 151-168, 2021

abstract

Abstract

The backtrace is one of the most common operations done by profiling and debugging tools. It consists in determining the nesting of functions leading to the current execution state. Frameworks and standard libraries provide facilities enabling this operation, however, it generally incurs both computational and memory costs. Indeed, walking the stack up and then possibly resolving functions pointers (to function names) before storing them can lead to non-negligible costs. In this paper, we propose to explore a means of extracting optimized backtraces with an O(1) storage size by defining the notion of stack tags. We define a new data-structure that we called a hashed-trie used to encode stack traces at runtime through chained hashing. Our process called stack-tagging is implemented in a GCC plugin, enabling its use of C and C++ application. A library enabling the decoding of stack locators though both static and brute-force analysis is also presented. This work introduces a new manner of capturing execution state which greatly simplifies both extraction and storage which are important issues in parallel profiling.

Abstract

Heterogeneous supercomputers are now considered the most valuable solution to reach the Exascale. Nowadays, we can frequently observe that compute nodes are composed of more than one GPU accelerator. Programming such architectures efficiently is challenging. MPI is the defacto standard for distributed computing. CUDAaware libraries were introduced to ease GPU inter-nodes communications. However, they induce some overhead that can degrade overall performances. MPI 4.0 Specification draft introduces the MPI Sessions model which offers the ability to initialize specific resources for a specific component of the application. In this paper, we present a way to reduce the overhead induced by CUDA-aware libraries with a solution inspired by MPI Sessions. In this way, we minimize the overhead induced by GPUs in an MPI context and allow to improve CPU + GPU programs efficiency. We evaluate our approach on various micro-benchmarks and some proxy applications like Lulesh, MiniFE, Quicksilver, and Cloverleaf. We demonstrate how this approach can provide up to a 7x speedup compared to the standard MPI model.

INTERNATIONAL JOURNAL OF PARALLEL PROGRAMMING, SPRINGER/PLENUM PUBLISHERS, p. 81-103, 2021

abstract

Abstract

Many applications of physics modeling use regular meshes on which computations of highly variable cost over time can occur. Distributing the underlying cells over manycore architectures is a critical load balancing step that should be performed the less frequently possible. Graph partitioning tools are known to be very effective for such problems, but they exhibit scalability problems as the number of cores and the number of cells increase. We introduce a dynamic task scheduling and mesh partitioning approach inspired by physical particle interactions. Our method virtually moves cores over a 2D/3D mesh of tasks and uses a Voronoi domain decomposition to balance workload. Displacements of cores are the result of force computations using a carefully chosen pair potential. We evaluate our method against graph partitioning tools and existing task schedulers with a representative physical application, and demonstrate the relevance of our approach.

Partie Méthodes numériques de la monographie, collection e-den, 2021

2021

abstract

Abstract

Methane (CH4) is one of the most contributing anthropogenic greenhouse gases (GHGs) in terms of global warming. Industry is one of the largest anthropogenic sources of methane, which are currently only roughly estimated. New satellite hyperspectral imagers, such as PRISMA, open up daily temporal monitoring of industrial methane sources at a spatial resolution of 30 m. Here, we developed the Characterization of Effluents Leakages in Industrial Environment (CELINE) code to inverse images of the Korpezhe industrial site. In this code, the in-Scene Background Radiance (ISBR) method was combined with a standard Optimal Estimation (OE) approach. The ISBR-OE method avoids the use of a complete and time-consuming radiative transfer model. The ISBR-OEM developed here overcomes the underestimation issues of the linear method (LM) used in the literature for high concentration plumes and controls a posteriori uncertainty. For the Korpezhe site, using the ISBR-OEM instead of the LM -retrieved CH4 concentration map led to a bias correction on CH4 mass from 4 to 16% depending on the source strength. The most important CH4 source has an estimated flow rate ranging from 0.36 ± 0.3 kg·s−1 to 4 ± 1.76 kg·s−1 on nine dates. These local and variable sources contribute to the CH4 budget and can better constrain climate change models.

IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing ( Volume: 14), 2021

abstract

Abstract

Reflectance spectroscopy is a widely used technique for mineral identification and characterization. Since modern airborne and satellite-borne sensors yield an increasing number of hyperspectral data, it is crucial to develop unsupervised methods to retrieve relevant spectral features from reflectance spectra. Spectral deconvolution aims to decompose a reflectance spectrum as a sum of a continuum modeling its overall shape and some absorption features. We present a flexible and automatic method able to deal with various minerals. The approach is based on a physical model and allows us to include noise statistics. It consists of three successive steps: first, continuum pre-estimation based on nonlinear least-squares; second, pre-estimation of absorption features using a greedy algorithm; third, refinement of the continuum and absorption estimates. The procedure is first validated on synthetic spectra, including a sensitivity study to instrumental noise and a comparison to other approaches. Then, it is tested on various laboratory spectra. In most cases, absorption positions are recovered with an accuracy lower than 5 nm, enabling mineral identification. Finally, the proposed method is assessed using hyperspectral images of quarries acquired during a dedicated airborne campaign. Minerals such as calcite and gypsum are accurately identified based on their diagnostic absorption features, including when they are in a mixture. Small changes in the shape of the kaolinite doublet are also detected and could be related to crystallinity or mixtures with other minerals such as gibbsite. The potential of the method to produce mineral maps is also demonstrated.

Proceedings Volume 11727, Algorithms, Technologies, and Applications for Multispectral and Hyperspectral Imaging XXVII, 2021

abstract

Abstract

We present a fuzzy logic approach allowing the identification of minerals from re ectance spectra acquired by hyperspectral sensors in the VNIR and SWIR ranges. The fuzzy logic system is based on a human reasoning. It compares the positions of the main and secondary absorptions of the unknown spectrum (spectral characteristics estimated beforehand) with those of a reference database (derived from mineralogical knowledge). The proposed solution is first evaluated on laboratory spectra. It is then applied to airborne HySpex and satellite-borne PRISMA images acquired during a dedicated campaign over two quarries in France. This demonstrates the relevance of the method to automatically identify minerals in different mineralogical contexts and in the presence of mixtures.

EARSeL Joint Workshop - Earth Observation for Sustainable Cities and CommunitiesAt: Liège, Belgium, 2021

abstract

Abstract

Roof materials can be a significant source of pollution for the environment and can have negative health effects. Analyses of runoff water revealed high levels of metal traces but also polycyclic aromatic hydrocarbons and phthalates. This contamination would result from corrosion and alteration of roof materials. Similarly, the alteration or combustion of asbestos contained in certain types of roofs may allow the emission and dispersion of asbestos fibres into the environment. Therefore, acquiring information on roof materials is of great interest to decrease runoff water pollution, and to improve air and environmental quality around our homes. To this end, remote sensing is a particularly relevant tool since it allows semi-automatic mapping of roof materials using multispectral or hyperspectral data. The CASMATTELE project aims to develop a semi-automatic identification tool of roofing materials over the Liege area using remote-sensing and machine learning for public authorities.

Computer (Volume: 54, Issue: 12, December 2021), 2021

abstract

Abstract

We investigate the different levels of abstraction, linked to the diverse artifacts of the scientific software development process, that a software language can propose and the validation and verification facilities associated with the corresponding level of abstraction the language can provide to the user.

Proceedings of the 14th ACM SIGPLAN International Conference on Software Language Engineering, p. 2-15, 2021

abstract

Abstract